Algorithm Death: As we head towards an immersive life in the Metaverse, alarms are blaring. Tech companies need to get their algorithms in order, or the future could be a dangerous one for some users.

We aren’t at Web3 yet, but problems with Web2 are already deeply concerning. Some algorithms built by big tech can benefit society, such as those that predict crime in advance, but others are a total disaster.

Coroner Andrew Walker concluded that 14-year-old Molly Rose Russell died from an act of self-harm while suffering from depression and the negative effects of online content.

Walker said that “it would not be safe for us to acknowledge suicide as the cause of death.”

Algorithm Death: Instagram and Pinterest

The coroner said that Instagram and Pinterest used algorithms that selected and showed her inappropriate content she had not asked for. According to Walker, “Some content romanticized young people’s acts of self-harm, while others promoted isolation and made it impossible to discuss the problem with people who might help.”

According to The Guardian, in the months before her death in 2017, Russell saved, liked, or posted more than 2,000 Instagram posts, all associated with suicide, depression, or self-harm. She also watched 138 videos of a similar nature, including episodes of the series 13 Reasons Why rated 15+ and 18+.

A child psychiatrist said during the hearing that after analyzing what Russell had watched shortly before her death, he was unable to sleep soundly for weeks.

“10 Depression Pins You May Like”

Investigators found hundreds of photos of self-harm and suicide on the girl’s Pinterest account. The platform had also sent her emails recommending inappropriate content, some with subject lines such as “10 Depression Pins You May Like.”

Walker concluded that it was likely the material viewed by Molly, who was already suffering from a depressive illness and was vulnerable due to her age, affected her mental health negatively and contributed to her death in a more than minimal way.

Meta and Pinterest officials apologized and admitted that Russell had encountered content on their platforms that should not have been there. A Meta spokesperson said, “We want Instagram to provide a positive experience for everyone, especially teenagers. We’ll take a close look at the coroner’s full report when he delivers it.”

Pinterest stated that it is constantly improving the platform and is committed to making it safe for everyone.

Other cases

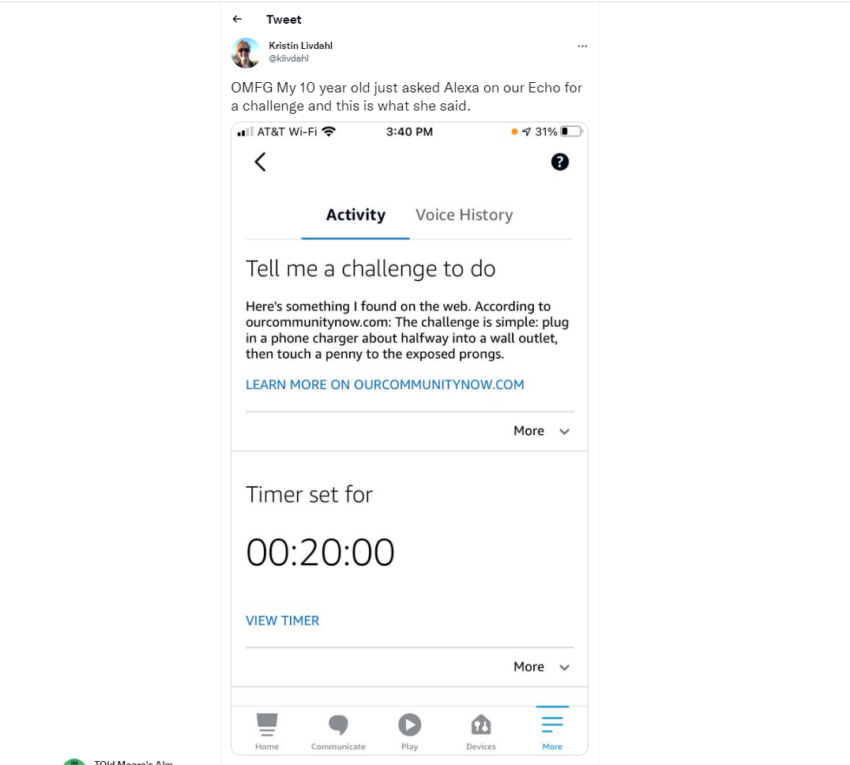

TikTok was sued in May over the alleged “lethal recommendations” of its algorithms. In December 2021, Amazon’s virtual assistant Alexa suggested a potentially deadly challenge to a 10-year-old child.

It’s a brave new world, but also a scary one. Big tech cannot afford to be complacent about the role it will play in this issue.
